Cloud infrastructure provisioning - best practices for IaC

Infrastructure-as-Code (IaC) is a common DevOps practice that enterprises use to provision and deploy IT infrastructure. Enterprises applying IaC and continuous integration/continuous delivery (CI/CD) pipelines can maintain high availability and manage risk to their cloud environments at scale. However, multi-environment challenges, manual processes, and fragmented guidance still lead to configuration drift, errors, and inconsistencies. These issues are likely to result in downtime, security vulnerabilities, and inefficient resource utilization.

Successful enterprises establish best practices early in the planning phase to reduce the probability of downstream deployment issues. This article provides a set of patterns and best practices that help enterprises enhance the reliability, security, and cost-effectiveness of their Azure cloud environments.

Practices that ensure secure, repeatable and reliable cloud infrastructure provisioning and deployments with IaC

Ensure consistency across environments

Ensuring consistency from the development environment through production reduces the risk of a failed deployment. A good approach to managing multiple configurations is through consistent folder structures that represent the environments. The structure should support easy identification of the different environment configurations. The same deployment pipeline can be used by merely swapping configuration values using pipeline parameters. An example would be having a folder structure with the environments as the top-level folder. Each folder can contain the same set of files per environment. New environments could be created by copying and renaming existing environments.

For example, with a dev, test, and prod environment, the folder structure could resemble the illustration below:

- 📂env
  - 📂dev
    - 📜01-init.json
    - 📜02-sql.json
    - 📜03-web.json
  - 📂test
    - 📜01-init.json
    - 📜02-sql.json
    - 📜03-web.json
  - 📂prod
    - 📜01-init.json
    - 📜02-sql.json
    - 📜03-web.json
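As a minimal sketch, assuming the `env/<environment>/` layout shown above, a pipeline step could check that every environment folder holds the same set of files and resolve a file from an environment name passed in as a pipeline parameter. The function names here are hypothetical and not part of any tool.

```python
from pathlib import Path


def environment_files(env_root: Path) -> dict[str, set[str]]:
    """Map each environment folder name to the set of file names it contains."""
    return {
        env_dir.name: {f.name for f in env_dir.iterdir() if f.is_file()}
        for env_dir in sorted(env_root.iterdir())
        if env_dir.is_dir()
    }


def find_inconsistencies(env_root: Path) -> dict[str, set[str]]:
    """Return, per environment, the files that exist elsewhere but are missing here."""
    files = environment_files(env_root)
    # The union of all file names is what every environment is expected to have.
    expected = set().union(*files.values())
    return {
        env: expected - names
        for env, names in files.items()
        if expected - names
    }


def config_path(env_root: Path, environment: str, file_name: str) -> Path:
    """Resolve a configuration file for the environment chosen by a pipeline parameter."""
    return env_root / environment / file_name
```

An empty result from `find_inconsistencies(Path("env"))` would indicate that the environments share the same file set, so the same pipeline can target any of them by changing only the `environment` argument.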

Starting with a reliable pipeline enables the team to deploy required infrastructure resources to target environments and reduces errors from misconfiguration. The following guidance helps to ensure deployments are secure and repeatable.

Set up idempotent pipelines

IaC pipeline executions should be idempotent: each execution of the same IaC code should produce identical results. Deployed infrastructure should not be affected unless there is a change to the IaC code. To achieve this behavior, use the state tracking mechanism of the IaC tool in use. Terraform uses a state file, while Bicep checks the current runtime state of the infrastructure.
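The idea can be illustrated with a toy model. The sketch below is not how Terraform or Bicep are implemented; it only assumes that desired and tracked state can each be represented as a dictionary of resource names to properties, and shows that a second run against unchanged code yields no changes.

```python
def plan_changes(desired: dict[str, dict], current: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and tracked state and list what an apply would do."""
    return {
        "create": sorted(name for name in desired if name not in current),
        "update": sorted(
            name for name in desired
            if name in current and desired[name] != current[name]
        ),
        "delete": sorted(name for name in current if name not in desired),
    }


def apply_desired_state(desired: dict[str, dict], current: dict[str, dict]):
    """Return the new tracked state and the changes that were applied."""
    changes = plan_changes(desired, current)
    new_state = {name: dict(props) for name, props in desired.items()}
    return new_state, changes


# Hypothetical resources, used only to demonstrate the behavior.
desired = {"sql": {"sku": "S0"}, "web": {"sku": "B1"}}
state, first_run = apply_desired_state(desired, current={})
_, second_run = apply_desired_state(desired, current=state)
# first_run  -> {"create": ["sql", "web"], "update": [], "delete": []}
# second_run -> {"create": [], "update": [], "delete": []}
```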

Modularize deployments

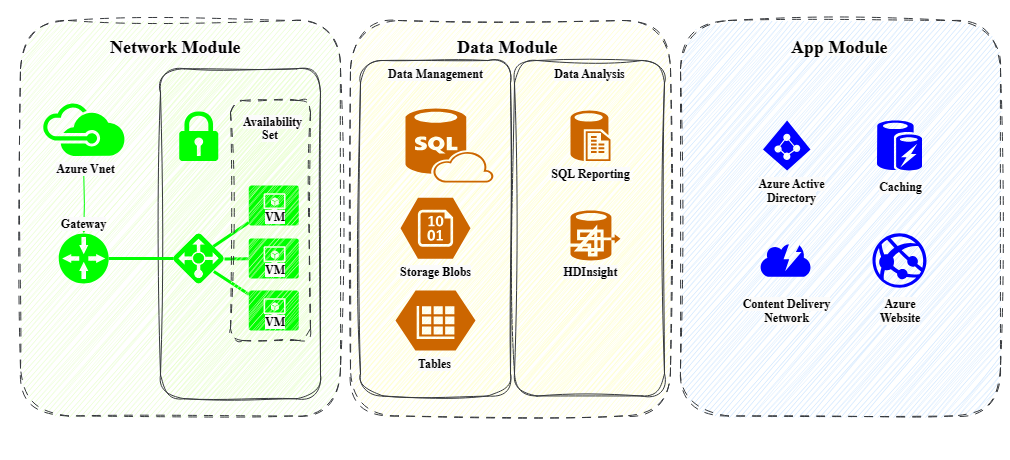

We recommend splitting the deployment into independent modules for complex infrastructures. Each module is responsible for a specific set of resources and configurations related to a single logical component. This approach allows for more modular, flexible and extendable deployments as the solution grows.

Employ a robust testing and quality check strategy

Even the smallest change can have a major impact on the deployed environment. When multiple teams collaborate on the same solutions, it is critical to validate all changes and verify that those changes do not break environments.

CI/CD pipelines unlock the ability to automate almost any task. Organizations extract more value from IaC deployments by automating code quality checks and testing to minimize deployment disruption.

When determining which tests to integrate and automate, focus on changes that can cause a deployment to fail. Checks and tests include:

| IaC Test/Check | Description |
|---|---|
| Template validation | Enable users to detect invalid code early and shorten the development iteration loop. |
| Organizational standards validation | Validate and enforce standards specific to the organization. |
| Desired state tests | Verify that the IaC deployment was successful. |
| Preview resources | Prevent unintended deletions. |
| Security scanning | Identify known vulnerabilities and security misconfigurations. |

To give teams more confidence that changes do not introduce risk to production, tests and checks should be integrated into a CI/CD pipeline.
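As one hedged illustration of the resource preview check, the sketch below assumes a Terraform plan that has been exported as JSON (for example with `terraform show -json` on a saved plan file), where each entry in `resource_changes` carries a `change.actions` list. The function name and the sample resource addresses are hypothetical.

```python
import json


def resources_to_delete(plan: dict) -> list[str]:
    """List addresses of resources the plan would delete or replace."""
    return [
        rc["address"]
        for rc in plan.get("resource_changes", [])
        # A replacement appears as both "delete" and "create" in the actions list.
        if "delete" in rc.get("change", {}).get("actions", [])
    ]


# Hand-written sample in the assumed plan JSON shape, for demonstration only.
sample_plan = json.loads("""
{"resource_changes": [
  {"address": "azurerm_resource_group.main", "change": {"actions": ["no-op"]}},
  {"address": "azurerm_storage_account.logs", "change": {"actions": ["delete"]}},
  {"address": "azurerm_mssql_database.app", "change": {"actions": ["delete", "create"]}}
]}
""")

flagged = resources_to_delete(sample_plan)
# flagged -> ["azurerm_storage_account.logs", "azurerm_mssql_database.app"]
```

A pipeline could fail the run, or require manual approval, whenever `flagged` is not empty.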

Linting and Validation

Implementing code linting and validation tools in a pipeline for IaC projects helps to identify and fix common issues in the code. To ensure code is always in a healthy state, linting and validation processes can be automated and integrated into a CI/CD pipeline. The linting process can also be used to enforce default organizational practices and coding standards.

Use code validation to ensure that code is internally consistent, semantically correct, and always deployable.

This practice improves readability, comprehension, and review, thereby reducing errors and improving maintainability of assets.

The following tools are options for linting and validating code. Each team should weigh the stated advantages and disadvantages and pick the tool that best meets its project requirements and fits its workflow.

For Terraform:

| Linting Tool | Description | Language | Advantages | Disadvantages |
|---|---|---|---|---|
| Terraform Lint (tflint) | A Terraform linter focused on possible errors, best practices, etc. | Go | Pluggable, supports custom rules, actively maintained | Some configuration needed |
| Checkov | A static code analysis tool for infrastructure-as-code. | Python | Supports multiple IaC tools (Terraform, CloudFormation, etc.), checks for security best practices, supports custom checks | Might have false positives/negatives |
| Terrascan | Detects security vulnerabilities and compliance violations. | Go | Supports multiple IaC tools, extensive policy library (supports OPA) | Can be slow on large codebases |
| Tfsec | A security scanner for your Terraform code. | Go | Fast, checks for security best practices, easy to use | Limited to security checks |
| Terraform Validate | A built-in command in Terraform for checking the syntax of your code. | Go (built into Terraform) | No additional installation needed, checks for syntax errors | Limited, only checks syntax, not security or best practices |
| Terraform-compliance | A lightweight, compliance-focused, open-source tool. | Python | Scenario-based tests, easy to read, supports custom checks | Only for behavior testing, not a comprehensive linter |

And for Bicep:

| Linting Tool | Description | Language | Advantages | Disadvantages |
|---|---|---|---|---|
| Bicep CLI | A command line tool for validating Bicep files. | C# | Built into Bicep, no additional installation needed | Only checks syntax, not security or best practices |
| Bicep VS Code Extension | Provides linting, autocompletion, code navigation, and more. | TypeScript | Integrated into VS Code, easy to use, supports IntelliSense | Limited to users of VS Code |
| ARM Template Test Toolkit (arm-ttk) | Although not specifically designed for Bicep, it can be used to lint compiled ARM templates. | PowerShell | Can test for best practices, errors, etc. | Requires Bicep code to be compiled to ARM templates first |
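Whichever tools are selected, they can be chained into a single pipeline step. The following is a rough sketch that assumes `terraform` and `tflint` are installed on the build agent; the command list, the function name, and the injectable `runner` parameter (useful for testing without the tools present) are illustrative choices rather than a prescribed setup.

```python
import subprocess
from typing import Callable, Sequence

# Assumed selection of checks; swap in the tools the team has chosen.
TERRAFORM_CHECKS: list[list[str]] = [
    ["terraform", "fmt", "-check", "-recursive"],
    ["terraform", "validate"],
    ["tflint"],
]


def run_checks(
    checks: Sequence[Sequence[str]],
    working_dir: str,
    runner: Callable = subprocess.run,
) -> list[str]:
    """Run every check in working_dir and return the commands that failed."""
    failed = []
    for command in checks:
        # With subprocess.run, a missing executable raises FileNotFoundError,
        # which is deliberately left unhandled so the pipeline step fails loudly.
        result = runner(list(command), cwd=working_dir)
        if result.returncode != 0:
            failed.append(" ".join(command))
    return failed
```

Running all checks before reporting, rather than stopping at the first failure, gives contributors the full list of problems in one pipeline run; a step could then exit with a non-zero status when the returned list is not empty.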

Optimize resources

Resource efficiency and right-sizing of cloud deployments are major challenges as organizations attempt to scale solutions. Manually managing resources in different environments becomes increasingly difficult as the number of teams and projects increases. Insufficient resource expiration and destruction processes lead to considerable sprawl, making it difficult for engineering teams to manually identify and dispose of obsolete resources. The result is inflated resource cost and potential security risks.

To dispose of obsolete environments, an effective best practice is to implement a "destroy" pipeline.

The destroy pipeline is either:

- Triggered periodically or on a scheduled basis. Obsolete resources can be identified by using a combination of tags and metadata contained in the environment. For example, the pipeline could filter resources marked with a specific tag to determine whether the environment is still needed. If the environment is no longer needed, the pipeline disposes of it.

- Or triggered automatically by an action that marks an environment as obsolete. An example of an action could be merging a pull request that was validated in that environment. Alternate flows are supported by using tags that mark environments to be preserved. Preserved environments may be used to investigate or analyze defects.
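The tag-based selection described in both options can be sketched as follows. The tag names `expires-on` and `preserve`, and the in-memory dictionary of environments, are assumptions made for illustration; a real pipeline would read tags from the cloud provider and would define its own tagging convention.

```python
from datetime import date


def is_obsolete(tags: dict[str, str], today: date) -> bool:
    """Decide whether an environment may be destroyed, based on its tags."""
    # Environments explicitly marked for preservation are never destroyed.
    if tags.get("preserve", "").lower() == "true":
        return False
    expires_on = tags.get("expires-on")
    if expires_on is None:
        # Untagged environments are left alone rather than guessed at.
        return False
    return date.fromisoformat(expires_on) < today


def environments_to_destroy(environments: dict[str, dict[str, str]], today: date) -> list[str]:
    """Return the names of environments whose tags mark them as obsolete."""
    return sorted(name for name, tags in environments.items() if is_obsolete(tags, today))


# Hypothetical environments and tags, for demonstration only.
environments = {
    "pr-101": {"expires-on": "2024-01-10"},
    "pr-102": {"expires-on": "2024-01-10", "preserve": "true"},
    "pr-103": {"expires-on": "2024-03-01"},
    "shared-dev": {},
}
obsolete = environments_to_destroy(environments, today=date(2024, 2, 1))
# obsolete -> ["pr-101"]
```

Treating missing tags as "keep" is a conservative design choice: an environment is only destroyed when its metadata says so explicitly.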

Destroying an environment may be more complex than simply deleting the resource group and the resources nested in it. External dependencies may also have been configured during provisioning, such as connections with external virtual networks or the deployment of Directory objects. Such dependencies may not be cleaned up when the resource group is deleted. An effective destroy pipeline should be able to apply compensating resource management principles to remove them. Resource state management can be highly beneficial in this scenario.

Explore Ephemeral Environments

Maintaining long-term resources for testing purposes is both time-consuming and resource-intensive. This challenge can be addressed with ephemeral environments in the pull request process. Ephemeral environments are temporary and disposable, allowing for easy and efficient testing without the need to maintain and secure long-term resources. Using ephemeral environments also mitigates the risks of breaking existing environments during deployment or testing. They can be used to replicate the target production environment, including the same infrastructure, software, and configuration. Ephemeral environments enable more accurate testing and reduce the risk of unexpected issues when deploying code changes.

Remote State (Terraform specific)

In a multi-team or multi-developer environment, managing the state of Terraform resources is a challenge. Using a local state file to track the state of deployed resources or source configurations can lead to drift or conflicts in the infrastructure resources, creating delays and increased costs. Terraform remote state should be configured so that multiple team members can safely share deployed infrastructure configurations in a read-only manner. This setup can be done without relying on extra configuration storage. Concurrent executions of Terraform commands against the same deployed resources are also supported.

Reference Example

The best practices in this article are codified and included in a repository referred to as Symphony. This quick start tool efficiently configures a new project with the best practices pre-implemented and ready to use or customize.

Symphony includes templates and workflows to help with:

- Automating deployment of resources using IaC

- Incorporating engineering fundamentals for IaC

- Resource validation

- Dependency management

- Test automation and execution

- Security scanning