Trade Study - Bulk Data Integration Solutions for Dataverse¶

Bulk data loading is the process of loading data in a single batch. Following this initial seeding, a delta update can be run on a schedule to maintain the data from the source system. As a one-way data load, this does not allow for updating the original data source. The most common use cases for this type of activity include:

- Seeding a Dataverse with initial data

- One-time uploading of simple datasets from a file

- Loading data from a data warehouse to the Dataverse via an ETL

- Large-scale data cleaning

Common tools used for bulk data loading include built-in platform tools, ISV built tools and Azure based tools such as Azure Data Factory.

Note: This trade study does not cover connecting Dataverse directly to another data source allowing for the flow of data bi-directionally and updating of the original data source.

Study Purpose and Use¶

This study should be used as a design and architecture foundation for a data integration with the Power Platform. This study will be the starting point in construction of a solution decision tree. By matching your requirements to the solution pros and cons, limitations, and expected scale, you will be able to match to a suitable solution pattern.

Intended Audience¶

- Developers

- Architects

This study makes the following system level assumptions¶

- Dataverse is the target data source for the bulk data integration

This study has the following system level constraints¶

- The Power Platform base application is augmented by other cloud and/or on-prem resources

- Dataverse is one of the primary data sources for data integration

Desired Outcomes¶

- Connect to unidirectional or bi-directional data

- Able to parse a specific or set of data formats

- Make the parsed data available to the Power Platform (either directly loading in the Dataverse or connecting and loading the data inline)

- When solution is complete, the client applications are able to consume the data using Power Apps

Key Metrics¶

To help you choose the right solution for your needs, we have included the following metrics:

Dataset size metric¶

| Small | Medium | Large | Large |

|---|---|---|---|

| 1-50 MB | 50-1 GB | 1GB-10GB | 10 GB+ |

Dataset complexity metric¶

| Simple | Medium | Complex | Complex |

|---|---|---|---|

| flat files with no relationship | hierarchical dataset with simple relationships | relational data with complex relationships between tables | relational data with complex relationships between tables and complex data types requiring data transformation |

Solutions Options¶

This list includes commonly used bulk data integration solutions for Dataverse.

Data Import Wizard¶

Solution Summary¶

Data Import Wizard is the original data ingestion tool provided with Power Platform. Data Import Wizard works best with small datasets to import one or two data tables. This tool is limited to use with text-based file formats. Typical use cases include importing simple single-table datasets like a conference attendee list or mailing list.

Key Findings¶

-

Pros

- Easy to use for users with variable technical skills

- Tool includes templates for reuse of common dataset uploads (e.g., conference attendee spreadsheet, mailing lists)

- Tool easily consumes any text file-formatted data.

- No-code solution (accessible for citizens developers).

-

Cons

- Designed for simple- to medium-complexity datasets

- Designed for small datasets (single zip file cannot exceed 32 MB)

- Limited scaling. This tool was never designed for large-scale data ingestion.

- Limited flexibility. This tool is not designed for complex data transformations. It is a simple data ingestion tool.

- Tool is limited to text file-formatted datasets (txt, csv, zip, xml, xlsx)

- No support for on-premises data sources (you have to bring text files to the cloud)

-

Recommended Use Cases

- Single sheet excel or csv files

- Single table data import

- Day-to-day data ingestion by non-technical users

- Creating small batch of seed records using built-in table examples (e.g., leads, opportunities, accounts, contacts)

- Small, simple text formatted datasets

Dataflows¶

Solution Summary¶

Dataflow is a built-in tool for Power Platform that allows users to clean, transform, and load data from disparate sources for enterprise use. A dataflow is a collection of tables managed from your Power Apps environment. Using dataflow, you can add data, schedule data refreshes, edit tables, and transform data using Power Query. You can then access your data by building apps, flows, Power BI reports, or connect directly to the dataflow's Common Data Model. You can do this by using Azure data services like Azure Data Factory, Azure Databricks or any other service that supports the Common Data Model folder standard.

Key Findings¶

-

Pros

- Easy to use for users with variable technical skill levels

- Dataflow connects to Dataverse, Dynamics 365 Finance, and other Azure data services.

- Variety of connectors built to Azure components and common data systems

- Can be templated for reuse

- Can be configured for incremental refreshes

- Complex transformation operations are done using Power Query and can be done by non-developers using a visual interface or by pro dev using M formula language.

- Multiple data sources supported

- Can be used to load data from on-premises data sources, using Gateway connectivity

- Can be added to Dataverse solution for version control and deployment

-

Cons

- Limited to small, medium size datasets

- Complex data transformations will require M formula language knowledge

- Use requires some basic data modeling knowledge and experience

- Declarative M formula language knowledge needed for more complex transformations

-

Recommended Use Cases

- Small to medium datasets with an available data connector

- Ingesting and connecting data from multiple data sources (e.g., two separate Dataverse instances or connecting Dataverse with Dynamics 365 Finance)

- Small to medium complexity datasets done by non-developers

- Complex transformations done by technical users with M formula language knowledge

Azure Data Factory¶

Solution summary¶

Azure Data Factory is the primary data ETL (Extract, Transform, Load) tool on Azure for data import or export from Azure data storage resources, including Power Platform/Dataverse. While not a low-code solution (users need to create data pipelines and ETLs), it can be optimized to enable import of large datasets in the shortest timeline available.

Key Findings¶

-

Pros¶

- Capable of handling the import of large datasets

- Can be used to import/export data from various data sources

- Handles complex data sources by transforming data in the pipeline using for example: DML statements, compute services such as Azure Batch or delegating logic to Azure Functions

- Pipeline based processing

- IaC (Infrastructure as Code) approach to data integration (can be version controlled using git hub)

-

Cons¶

- Users need ETL and DBA-level knowledge to create the import

- Fairly complex tool with a learning curve- use requires experience with data integration

-

Recommended Use Cases¶

- Step-up from Dataflows for large and large datasets

- Importing large datasets that require complex and optimized data transformations

- Data import projects undertaken by DBAs or non-platform implementation team

- Inserting data into Dataverse table having referential columns using two pass operation.

- Using DataFlow or Copy activity to ingest data in Dataverse table, a performance comparison

- Data Integration with external systems. Example: SAP Integration with Dataverse

Power Automate¶

Solution Summary¶

Power Automate is a built-in process automation tool for Power Platform that can be used to import and export data. Power Automate is able to enrich Dataverse data with data from upstream systems using triggers or by responding to events.

Key Findings¶

-

Pros¶

- Easy to use for users with variable technical skill levels

- Able to scale to meet small to medium datasets that require integration with other systems

- No-code solution, but uses declarative formulas

- Includes built-in functions for data manipulation (shared with Azure Logic Apps)

- Can be used on top of Power Apps licensing model

- Can be used to import data from on-premises data sources, using Gateway connectivity

- Can be added to solution for version control and deployment

- Can handle medium to complex datasets

-

Cons¶

- Power Automate is a shared system level resource. This means that Microsoft imposes service protection limits and throttle limits to ensure consistent customer service levels. Throttling issues can result for larger datasets.

- Poor process flow design can have significant impact on performance

- For professional developers, limitations in declarative formula style results in tool feeling less intuitive for use.

-

Recommended Use Cases¶

- Integration-focused data ingestion that allows to act on data change events

- Connecting to data systems that have an out-of-the-box connector

- Connecting to systems with an OData, REST, or SOAP endpoint

- A working Postman or swagger collection, in order to create a custom connector

- Running a scheduled data import

Azure Logic Apps¶

Solution Summary¶

Azure Logic Apps is a low-code Azure-based Process Automation tool. While Logic Apps and Power Automate have the same basic functionality and use cases, Logic Apps can be hosted on dedicated resources, depending on the billing model. Logic Apps charges customers for memory and compute resources without imposing service protection limits, which enables scaling for larger datasets at a higher customer cost.

Key Findings¶

-

Pros¶

- Easy to use for users with variable technical skill levels

- Able to scale to meet typical datasets

- No-code solution, but uses declarative formulas

- Includes built-in functions for data manipulation (shared with Power Automate)

- Able to scale to meet large datasets

- Can handle simple data transformations using build-in expressions

- Ability to use batch processing to improve performance

- Build on top of ARM templates, allowing for IaC approach to data integration

- Can handle large and complex datasets

- Can be used to import data from on-premises data sources, using Gateway connectivity

-

Cons¶

- Working with bigger datasets can be cost prohibitive

- Depending on the license used, resources are shared with other customers

- For professional developers, limitations in declarative formula style results in tool feeling less intuitive for use.

-

Recommended Use Cases¶

- Connecting to data systems that have an out-of-the-box connector

- Connecting to systems with an OData, REST, or SOAP endpoint

- Using an event to drive data integration

- Running a scheduled data imported

- Good for near-live data integration

KingswaySoft Integration Toolkit¶

Solution summary¶

Kingswaysoft connector for SQL Server Integration Services(SSIS)/ADF(v2) is a toolkit from a third-party vendor (Kingswaysoft) that provides a connector to the Dataverse, and allows for building integrations with the Power Platform. The toolkit can be used with either SQL Server SSIS or ADF(v2). The toolkit is built on the XRM core and allows the import to have multiple threads and multiple connections to increase the throughput and open up the import for large datasets. This toolkit by itself will not import data but is part of an overall solution to import data. When used properly and efficiently this tool can speed up the time to go live with your Power Platform implementation.

Key Findings¶

-

Pros¶

- Is built for both SSIS and ADF use

- Will aid in large datasets

- Will aid in complex datasets

- Good for exporting on-premises data to the Cloud

-

Cons¶

- Part of a full code solution

- Need advanced knowledge of SSIS/ADF to use this tool

- Fairly complex in fine-tuning. To achieve high throughput requires a good understanding of the tool and the data being imported

- Cost of additional licensing

-

Recommended Use Cases¶

- When using SSIS/ADF as the project's integration tool.

- Good when working with relational data, especially stored in SQL Server

- Good for migrating on-premises data to the Cloud

Custom Code¶

Solution Summary¶

Custom code is the highest-code alternative for data integration tools to bring large volumes of data from other systems or for end users to import large datasets into the platform. In a custom code solution, the implementation team would write a custom import routine to handle both the ETL and platform connectivity. Developing a custom code solution requires consideration of many factors, including network infrastructure, bandwidth, dataset size and complexity, security and securing the data import channel. Microsoft Dataverse Web API or Dataverse Service Client can aid in custom solution development, but you should still consider available resources, timeline, internal infrastructure, and dataset scale when weighing the efficacy of this option.

Key Findings¶

-

Pros¶

- Precision fit to project requirements

- Fine-tuning for max throughput

- Ability to log and provide high observability

- Reusable for delta's and seed

- Ability to use to code your own high throughput integration codebase

-

Cons¶

- This option uses a library that you'll need to later build on top of

- Resource and code intensive work required

- Users must follow a Software Development Life-Cycle process to ensure code is high quality and tested

- No support for on-premises data sources, unless implemented by the project's team

-

Recommended Use Cases¶

- Importing large or complex datasets: you can build your own tooling to handle the import

- Should be used if you require your own codebase to data integration process and other tools are not a fit or not allowed by your organization

-

Samples¶

Here is a sample implementation that uses custom C# code hosted on Azure App Service to perform bulk data import from an excel into Dataverse: Power Platform and Azure App Service sample for bulk data import into Dataverse

The solution contains a Power Platform base application whose capabilities are extended using Azure. Power Apps model-driven app is used as the presentation layer for downloading/uploading excel files, monitoring progress of bulk data import, and viewing validated and transformed data. Power Automate flow is used to orchestrate the entire bulk data import process. It is triggered on upload of excel and calls APIs hosted on Azure App Service. Azure App Service runs custom code that performs excel data parsing, runs complex business validations, and does bulk data load into multiple tables in Dataverse.

The sample uses a Dataverse Rest Client, which is a .NET 7 library that provides a wrapper around the Microsoft Dataverse Web API, for all interactions with Dataverse. The library provides important capabilities that help in interaction with Dataverse Web API such as authentication, OData query, batch requests, conversion of response to POCO class objects, entity metadata, and resilience and fault handling.

The solution also demonstrates different approaches to tune performance of bulk data load into Dataverse:

- Batch requests to Dataverse Web API to perform multiple operations in a single HTTP request.

- Combine batch requests with threading to process larger datasets even faster in parallel batches.

Microsoft Power Platform CLI¶

Solution Summary¶

Microsoft Power Platform CLI is a command-line interface that allows you to automate the creation of Power Platform solutions. One of the commands included is pac data import command. This allows import of multiple flat files that represent Dataverse table records.

Key Findings¶

-

Pros¶

- Data import is done in a single command

- Can be used to import multiple files in a single zip file

- Useful for importing data from a CI/CD pipeline

-

Cons¶

- Can only be used for small and simple datasets

- No support for on-premises data sources

- Not designed for performance

-

Recommended Use Cases¶

- Useful for importing configuration data during a CI/CD process

- One time small data migration between Dataverse environments

- Can easily create export data package using

pac data exportand Configuration Migration Tool

Additional Considerations¶

Accessing On-Premises Data¶

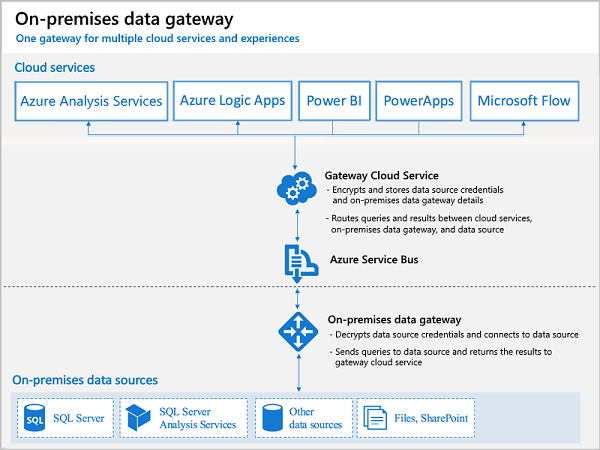

In order to access data stored in on-premises, Power Platform provides a dedicated an On-Premises Data Gateway. It provides quick and secure access to your local data for services such as:

- Power Automate

- Power Apps

- Azure Logic Apps

Use of the Gateway brings also some technical limitations that are described here

For data ingestion solutions, where Data Gateway is not available, Azure Relay, Azure VPN Gateway or Azure Private Link can be used.

Summary¶

The following table summarizes the different options for importing data into Power Platform.

| Solution | Dataset Size | Dataset Complexity | Can use On-Premises Data Getaway | Cost | Recommended Use Cases |

|---|---|---|---|---|---|

| Data Import Wizard | Small | Simple | No | Built-in Dataverse Feature | Non-technical users day-to-day use |

| Dataflow | Small-Medium | Medium-Complex | Yes | Included in Platform | Non-technical users day-to-day use |

| Azure Data Factory | Large | Very Complex | No (use Azure Private Link) | Azure subscription required | Importing and transforming large and complex datasets |

| Power Automate | Small-Medium | Medium-Complex | Yes | Included in Platform | Small to Medium integration scenarios that low-code developers can handle |

| Azure Logic Apps | Large | Medium-Complex | Yes | Azure subscription required | Large to Very Large integration scenarios |

| KingswaySoft Integration Toolkit | Large | Very Complex | No | Paid | When using SSIS/ADF as the project's integration tool. Especially for on-premises to cloud migrations |

| Custom Code | Large | Very Complex | No (Custom solution required) | Free | Importing large, complex datasets. You can build your own tooling to handle the import |

| Microsoft Power Platform CLI | Small | Simple | No | Free | Useful for importing configuration data during a CI/CD process |